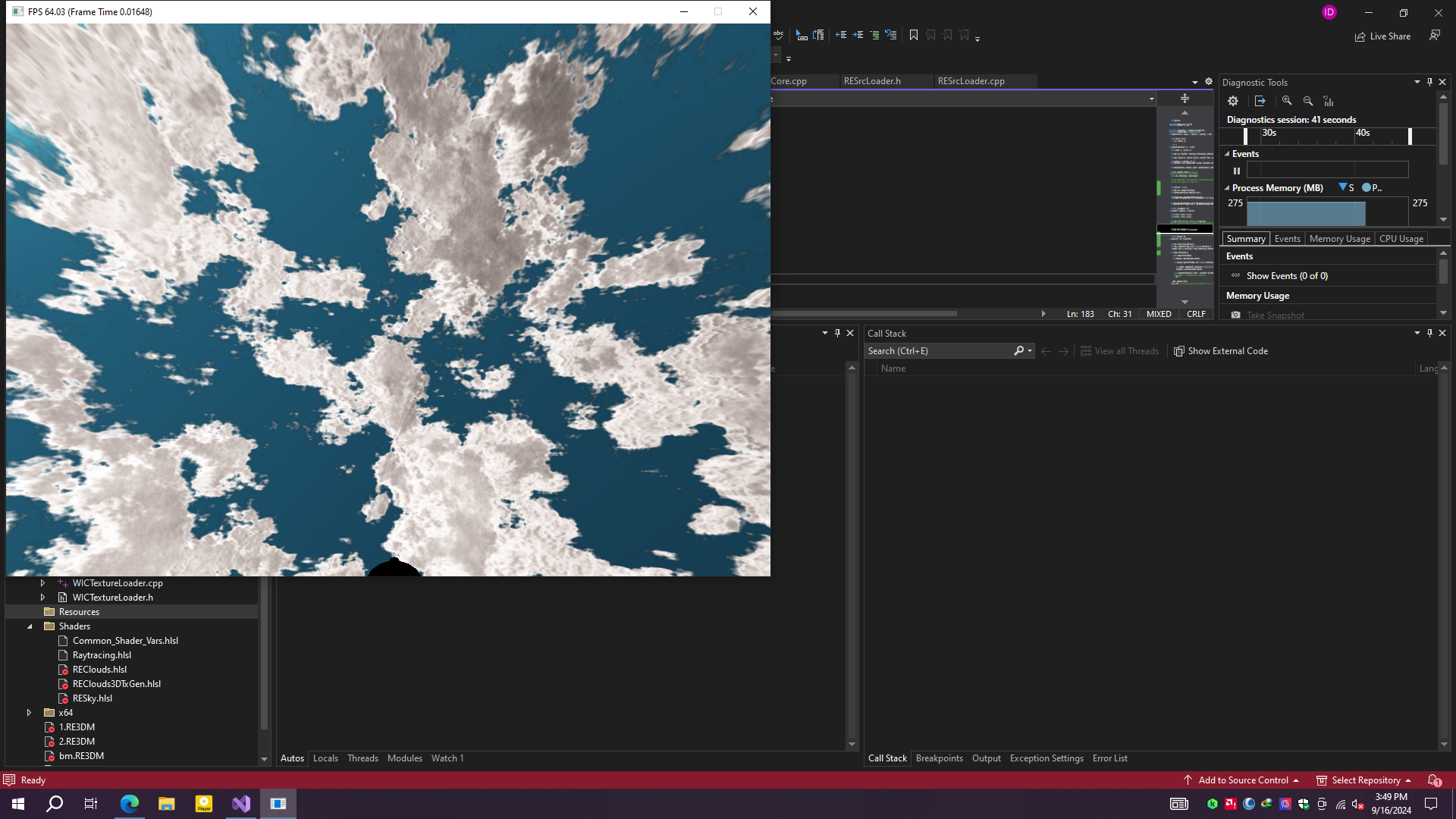

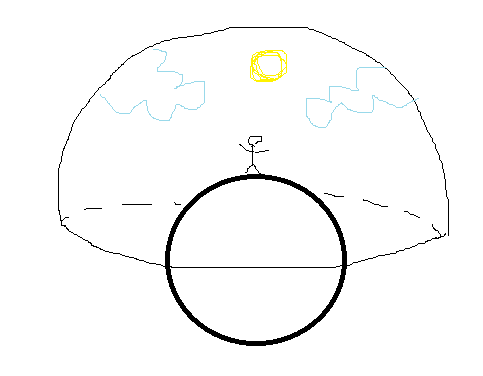

Hi, I'm trying to generate clouds based on "The Real-Time Volumetric Cloudscapes of Horizon Zero Dawn", but I'm having trouble sampling the Shape (3D texture, 128×128×128), Detail (3D texture, 32×32×32) and Weathermap (2D texture, 1024×1024) textures correctly. Here's my implementation; could anyone give me hints on how to fix it?

```hlsl
// Maps a world position to UVW for the 3D Shape/Detail textures using spherical coordinates
float3 GetUVWMap1(in float3 worldPos)
{
    float3 uvw;
    float radius = length(worldPos) - InnerRadius;   // height above the inner cloud shell
    float theta = atan2(worldPos.y, worldPos.x);      // azimuthal angle
    float phi = acos(worldPos.z / radius);            // polar angle

    // Normalize spherical coordinates to [0, 1] range for UVW mapping
    float u = theta / (2.0f * PI);
    float v = phi / (PI * 0.5f);
    float w = radius / (inMinMaxCloudLayer.y - inMinMaxCloudLayer.x);

    return float3(u, v, w);
}
```
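One way to check this mapping is to port it to the CPU and look at its output for a few points. The C++ below is a minimal sketch that mirrors `GetUVWMap1`; `InnerRadius = 6000` and the layer bounds standing in for `inMinMaxCloudLayer` are hypothetical values, and `GetUVWMap1Checked` is a hypothetical variant for comparison. With those values, a point on the +z axis 100 units above the inner shell gives `worldPos.z / radius = 6100 / 100 = 61`, so `acos` returns NaN, and `v = phi / (PI * 0.5f)` spans [0, 2] rather than the [0, 1] the comment intends.

```cpp
#include <cassert>
#include <cmath>

struct float3 { float x, y, z; };

constexpr float PI = 3.14159265358979f;

// Hypothetical values for illustration only; substitute your own constants.
constexpr float InnerRadius = 6000.0f;
constexpr float LayerMin = 0.0f, LayerMax = 1500.0f;  // stand-in for inMinMaxCloudLayer.xy

static float length3(float3 p) { return std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z); }

// Direct CPU port of GetUVWMap1 from the question.
float3 GetUVWMap1(float3 worldPos) {
    float radius = length3(worldPos) - InnerRadius;  // height above the inner shell
    float theta = std::atan2(worldPos.y, worldPos.x);
    float phi = std::acos(worldPos.z / radius);      // NaN when |z| > radius
    return { theta / (2.0f * PI), phi / (PI * 0.5f), radius / (LayerMax - LayerMin) };
}

// Hypothetical variant: polar angle from the full distance to the planet centre,
// divided by PI, and u shifted so both land in [0, 1].
float3 GetUVWMap1Checked(float3 worldPos) {
    float len = length3(worldPos);
    float height = len - InnerRadius;
    float theta = std::atan2(worldPos.y, worldPos.x);
    float phi = std::acos(worldPos.z / len);
    return { theta / (2.0f * PI) + 0.5f, phi / PI, height / (LayerMax - LayerMin) };
}
```

Even with the ranges fixed, a latitude/longitude mapping still stretches texels along `u` near the equator and squeezes them toward the poles, so on its own it may not remove the distortion.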

```hlsl
// Maps a world position to 2D weather-map UVs by projecting its direction
// (relative to a point offset below the camera) onto a disk centred at (0.5, 0.5)
float2 GetUVMap2(in float3 worldPos)
{
    float3 pos = normalize(worldPos - float3(g_sceneCB.CameraPos.x, -(g_sceneCB.CameraPos.y + InnerRadius), g_sceneCB.CameraPos.z));
    float r = atan2(sqrt(pos.x * pos.x + pos.z * pos.z), pos.y) / (PI);
    float phi = atan2(pos.z, pos.x);
    float2 coord = float2(r * cos(phi) + 0.5f, r * sin(phi) + 0.5f);
    return coord;
}
```
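The projection origin in `GetUVMap2` follows `CameraPos.x` and `CameraPos.z`, so the same world point gets different weather UVs whenever the camera moves. Note also that `GetUVWMap1` measures the polar angle from +z, while this function treats +y as up. The C++ below is a minimal CPU port to check that; `camPos` stands in for `g_sceneCB.CameraPos`, and `InnerRadius`, `kWeatherMapWorldSize` and `WeatherUVPlanar` (a world-anchored planar mapping over XZ, shown only for comparison) are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct float2 { float x, y; };
struct float3 { float x, y, z; };

constexpr float PI = 3.14159265358979f;
constexpr float InnerRadius = 6000.0f;            // hypothetical value
constexpr float kWeatherMapWorldSize = 60000.0f;  // hypothetical: world units covered by the weather map

static float3 normalize3(float3 p) {
    float len = std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z);
    return { p.x / len, p.y / len, p.z / len };
}

// CPU port of GetUVMap2; camPos stands in for g_sceneCB.CameraPos.
float2 GetUVMap2(float3 worldPos, float3 camPos) {
    // worldPos - float3(cam.x, -(cam.y + InnerRadius), cam.z)
    float3 pos = normalize3({ worldPos.x - camPos.x,
                              worldPos.y + (camPos.y + InnerRadius),
                              worldPos.z - camPos.z });
    float r = std::atan2(std::sqrt(pos.x * pos.x + pos.z * pos.z), pos.y) / PI;
    float phi = std::atan2(pos.z, pos.x);
    return { r * std::cos(phi) + 0.5f, r * std::sin(phi) + 0.5f };
}

// Hypothetical alternative: anchor the weather map to world XZ, independent of the camera.
float2 WeatherUVPlanar(float3 worldPos) {
    return { worldPos.x / kWeatherMapWorldSize + 0.5f,
             worldPos.z / kWeatherMapWorldSize + 0.5f };
}
```

With this projection `r` reaches 0.5 at the horizon, so only directions above the horizon land inside the [0, 1] square.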

```hlsl
// Samples the weather map after skewing the position with height and scrolling it with wind
float3 SampleWeather(in float3 inPos)
{
    float3 wind_direction = float3(1, 0, 0);
    float cloud_speed = 500000;
    float cloud_offset = 700000;

    // Skew with height, then scroll with time
    float height_fraction = GetHeightFractionForPoint(inPos);
    inPos += height_fraction * wind_direction * cloud_offset;
    inPos += wind_direction * cloud_speed * g_sceneCB.mTime;

    return txWeatherMap.SampleLevel(ssClouds, GetUVMap2(inPos), 0).rgb * 2;
}
```
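The wind offsets in `SampleWeather` are very large compared with the distances involved in the direction-based projection of `GetUVMap2`. The C++ below is a minimal sketch, with the camera at the origin and a hypothetical `InnerRadius = 6000`, that measures how far from the map centre (0.5, 0.5) a sample lands: a point 1000 units straight above the camera starts at the centre, but after one second at `cloud_speed = 500000` it lands at about 0.4955, almost on the horizon ring at 0.5. If that holds at your scene scale, most samples would come from a thin band near the rim of the weather map. `WeatherUVRadius` and `ApplyWind` are hypothetical helpers.

```cpp
#include <cassert>
#include <cmath>

struct float3 { float x, y, z; };

constexpr float PI = 3.14159265358979f;
constexpr float InnerRadius = 6000.0f;  // hypothetical value

// Distance from (0.5, 0.5) of the UV produced by GetUVMap2, i.e. r = polar angle / PI.
// Normalizing first is unnecessary here because atan2 only depends on the ratio.
float WeatherUVRadius(float3 worldPos, float3 camPos) {
    float dx = worldPos.x - camPos.x;
    float dy = worldPos.y + camPos.y + InnerRadius;
    float dz = worldPos.z - camPos.z;
    return std::atan2(std::sqrt(dx * dx + dz * dz), dy) / PI;
}

// Same displacement as SampleWeather, with wind along +x.
float3 ApplyWind(float3 p, float heightFraction, float time) {
    const float cloud_speed = 500000.0f;
    const float cloud_offset = 700000.0f;
    p.x += heightFraction * cloud_offset + cloud_speed * time;
    return p;
}
```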

```hlsl
// Cloud density: base shape, height gradient, coverage, then detail erosion
float SampleCloudDensity(float3 inP, float3 inWeatherData)
{
    float3 coord = GetUVWMap1(inP);

    // Low-frequency shape noise; G, B and A are combined into an FBM
    float4 low_frequency_noises = txShape.SampleLevel(ssClouds, coord, 0).rgba;
    float low_freq_fbm = (low_frequency_noises.g * 0.625f) + (low_frequency_noises.b * 0.25f) + (low_frequency_noises.a * 0.125f);
    float base_cloud = Remap(low_frequency_noises.r, -(1.0f - low_freq_fbm), 1.0f, 0.0f, 1.0f);

    // Shape the cloud vertically
    float density_height_gradient = GetDensityHeightGradientForPoint(inP, inWeatherData);
    base_cloud *= density_height_gradient;

    // Apply weather-map coverage
    float cloud_coverage = inWeatherData.r;
    float base_cloud_with_coverage = Remap(base_cloud, cloud_coverage, 1.0f, 0.0f, 1.0f);
    base_cloud_with_coverage *= cloud_coverage;

    // High-frequency detail noise erodes the edges
    float3 high_frequency_noises = txDetail.SampleLevel(ssClouds, coord * 0.1f, 0).rgb;
    float high_freq_fbm = (high_frequency_noises.r * 0.625f) + (high_frequency_noises.g * 0.25f) + (high_frequency_noises.b * 0.125f);
    float height_fraction = GetHeightFractionForPoint(inP);
    float high_freq_noise_modifier = lerp(high_freq_fbm, (1.0f - high_freq_fbm), saturate(height_fraction * 10.0f));
    float final_cloud = Remap(base_cloud_with_coverage, high_freq_noise_modifier * 0.2f, 1.0f, 0.0f, 1.0f);

    return saturate(final_cloud);
}
```
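In `SampleCloudDensity` the detail texture is read at `coord * 0.1f`, which lowers its frequency rather than raising it. A short C++ check of texel footprints (measured in units of `coord`) shows the size of the effect: the 128³ shape texture at scale 1 has a texel of 1/128, while the 32³ detail texture at scale 0.1 has a texel of 1/3.2, about 40 times coarser. `TexelFootprint` and `DetailScaleForRatio` are hypothetical helpers; the second assumes the shape texture is sampled at scale 1.

```cpp
#include <cassert>
#include <cmath>

// Size of one texel, in units of the sampling coordinate, for a wrapping texture
// with `res` texels per axis sampled at coord * scale. Larger means coarser noise.
float TexelFootprint(int res, float scale) {
    return 1.0f / (static_cast<float>(res) * scale);
}

// Scale for the detail coordinates so that each detail texel is `finerBy` times
// smaller than a shape texel, assuming the shape texture is sampled at scale 1.
float DetailScaleForRatio(int shapeRes, int detailRes, float finerBy) {
    return finerBy * static_cast<float>(shapeRes) / static_cast<float>(detailRes);
}
```

For example, making the detail texels half the size of the shape texels would need a detail scale of 8 (`DetailScaleForRatio(128, 32, 2.0f)`) instead of 0.1.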

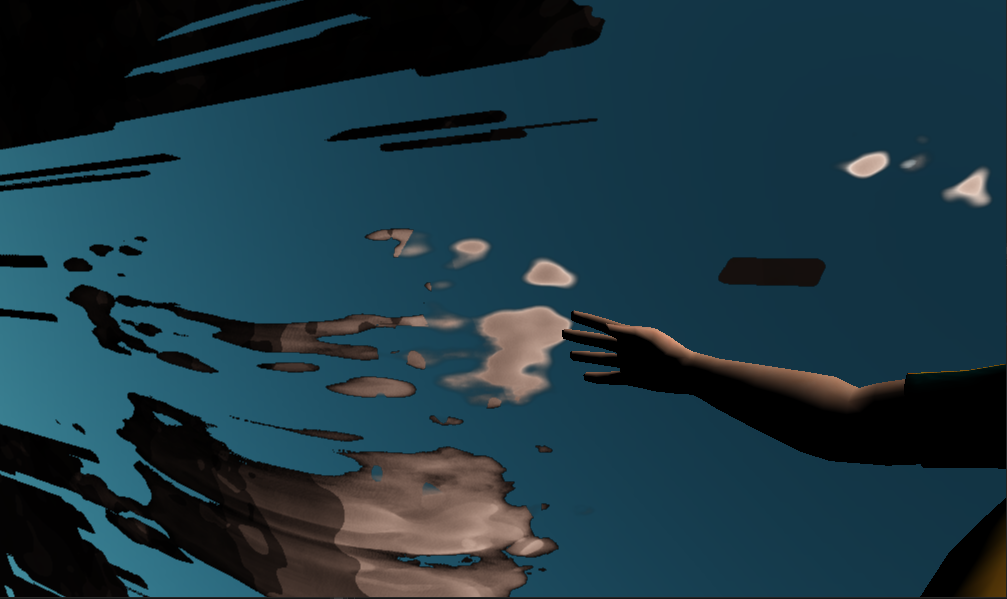

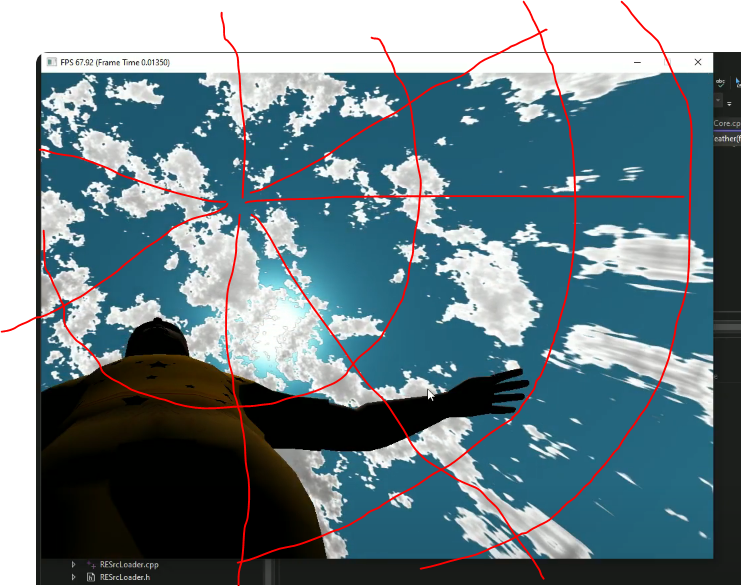

No matter what I do, this distortion happens.
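One possible contributor to this kind of distortion is texel stretching in the latitude/longitude mapping of `GetUVWMap1`. Because `u` spans a full circle of latitude, one texel along `u` covers `2π·R·sin(phi) / 128` world units: at a hypothetical distance `R = 6000` from the planet centre that is about 294.5 units at the equator but only about 2.9 units at `phi = 0.01`. A common alternative is to sample the tiling 3D noise directly with scaled world positions, whose texel size is the same everywhere. The C++ below is a minimal sketch comparing the two; `SphericalTexelWidthU`, `WorldSpaceTexelWidth` and the tile size of 20000 units are hypothetical.

```cpp
#include <cassert>
#include <cmath>

constexpr float PI = 3.14159265358979f;

// World-space width of one texel along u for the latitude/longitude mapping in
// GetUVWMap1, where u = theta / (2 * PI) spans a full circle of latitude.
// sphereRadius: distance from the planet centre; phi: polar angle from +z.
float SphericalTexelWidthU(float sphereRadius, float phi, int res) {
    return 2.0f * PI * sphereRadius * std::sin(phi) / static_cast<float>(res);
}

// World-space width of one texel for a hypothetical world-space mapping
// uvw = worldPos / tileSize (wrap addressing). It does not depend on position.
float WorldSpaceTexelWidth(float tileSize, int res) {
    return tileSize / static_cast<float>(res);
}
```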