Hello,

I'm experiencing issues with my tilemap shader on a wide range of Intel HD GPU chipsets. The issue was introduced by, and seems to stem from, packing the coordinates of the tilemap lookup into a single float in a texture. The packing code:

```cpp
const auto makePackedTile = [&](uint16_t offX, uint16_t offY, uint8_t autotileId)
{
    // Layout: bits 0-3 = autotileId, bits 4-17 = offX, bits 18-31 = offY
    AE_ASSERT(autotileId <= 8);
    uint32_t packed = autotileId;

    AE_ASSERT(offX <= 512);
    AE_ASSERT(offY <= 512);
    packed |= offX << 4;
    packed |= offY << 18;

    AE_ASSERT((packed & 0x0F) == autotileId);
    AE_ASSERT(((packed >> 4) & 0x3fff) == offX);
    AE_ASSERT((packed >> 18) == offY);

    return std::bit_cast<float>(packed); // std::bit_cast requires C++20 <bit>
};
```

As the asserts at the end verify, this should yield the correct results (and it does on every other GPU). The result is written to a two-component 32-bit floating-point texture (RGF32), with an additional z-value in the second component. The shader code that reads the data:

int2 calculateTileOffsets(uint packedTile, float autotileData[9])

{

uint autotileId = packedTile & 0x0F;

int2 vOffset;

vOffset.x = (packedTile >> 4) & 0x3fff;

vOffset.y = autotileData[autotileId];

vOffset.y += packedTile >> 18;

return vOffset;

}

// usage:

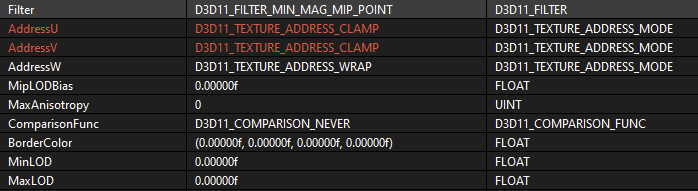

float2 tile = Tilemap.SampleLevel( PointClamp, float3(In[0].vPos.zw / vTileHeight.yz,(Out.layer + 0.5f)/numRealLayers), 0);

uint packedTile = asuint(tile.x);

if (packedTile != -1)

{

Out.vPos.z = 1.0f - (tile.y + curr * 0.0000001f);

float2 vTex0 = calculateTileOffsets(packedTile, autotileData) * vTileHeight.wx;I've been able to track the problem down to that function and the data extracted from “packedTile”. If I replace the evaluation of vOffset.x/y with static values, those tiles are then rendered. Also, when I write arbitrary float-values into the texture, and use them in place of “tile.x”, the result is the same, meaning the sample of the actual texture should work. Also, I've checked that the state being bound and the content of all the textures and cbuffers are the same on the PC where the shader works, and the one where it doesn't. Just in case you wonder, here's the complete shader (which uses a geometry-shader):

```hlsl
#pragma pack_matrix( row_major )
#pragma ruledisable 0x0802405f

cbuffer Instance: register(b3)
{
    float4 vOffset;
    float4 vTileOffset;
    float tileSize;
    float4 vInvTileSize;
    float4 vTileHeight;
    float3 vLayers;
    float activeLayer;
    float4 vInvTilesetSize;
    float4 vColorTone;
};

cbuffer Custom0: register(b4)
{
    float autotileData[9];
};

Texture3D Tilemap: register(t0);
Texture2D Image: register(t1);
sampler PointClamp : register(s0);

int2 calculateTileOffsets(uint packedTile, float autotileData[9])
{
    uint autotileId = packedTile & 0x0F;
    int2 vOffset;
    vOffset.x = (packedTile >> 4) & 0x3fff;
    vOffset.y = autotileData[autotileId];
    vOffset.y += packedTile >> 18;
    return vOffset;
}

struct VERTEX_IN
{
    float posIndex: SV_POSITION0;
};

struct VERTEX_OUT
{
    float4 vPos: SV_POSITION0;
};

VERTEX_OUT mainVS(VERTEX_IN In, uint VertexId : SV_VertexID, uint InstanceId : SV_InstanceID)
{
    VERTEX_OUT Out;
    uint offset = vOffset.w;
    float indexX = VertexId % offset;
    float indexY = floor(VertexId / offset);
    Out.vPos.x = indexX * tileSize;
    Out.vPos.y = indexY * tileSize;
    Out.vPos.xy += vOffset.xy;
    Out.vPos.x = Out.vPos.x * vTileOffset.z - 1.0f;
    Out.vPos.y = -Out.vPos.y * vTileOffset.w + 1.0f;
    indexX += vTileOffset.x;
    indexY += vTileOffset.y;
    Out.vPos.zw = float2(indexX, indexY);
    return Out;
}

struct GEOMETRY_OUT
{
    float4 vPos: SV_POSITION0;
    float2 vTex0: TEXCOORD0;
    float layer: TEXCOORD1;
};

[maxvertexcount(16)]
void mainGS(inout TriangleStream<GEOMETRY_OUT> outputStream, point VERTEX_OUT In[1])
{
    GEOMETRY_OUT Out;
    if(In[0].vPos.z >= 0 && In[0].vPos.w >= 0 && In[0].vPos.z <= vTileHeight.y && In[0].vPos.w <= vTileHeight.z)
    {
        Out.vPos.w = 1.0f;
        float numLayers = vLayers.x;
        float numRealLayers = vLayers.z;
        float layerOffset = vLayers.y;
        for(float i = 0; i < numLayers; i++)
        {
#if OPAGUE
            float curr = (numLayers - 1) - i;
#else
            float curr = i;
#endif
            Out.layer = curr + layerOffset;
            float2 tile = Tilemap.SampleLevel( PointClamp, float3(In[0].vPos.zw / vTileHeight.yz,(Out.layer + 0.5f)/numRealLayers), 0);
            uint packedTile = asuint(tile.x);
            if (packedTile != -1)
            {
                if(tile.y != 0.0f)
                {
                    tile.y += vOffset.z;
                }
                else
                {
                    tile.y = 0.5f - 0.0002f;
                }
                Out.vPos.z = 1.0f - (tile.y + curr * 0.0000001f);
                float2 vTex0 = calculateTileOffsets(packedTile, autotileData) * vTileHeight.wx;
                vTex0 += vInvTilesetSize.xy;

                Out.vPos.x = In[0].vPos.x;
                Out.vPos.y = In[0].vPos.y;
                Out.vTex0.x = vTex0.x;
                Out.vTex0.y = vTex0.y;
                outputStream.Append(Out);

                Out.vPos.x = In[0].vPos.x + vInvTileSize.x;
                Out.vPos.y = In[0].vPos.y;
                Out.vTex0.x = vTex0.x + vInvTileSize.z;
                Out.vTex0.y = vTex0.y;
                outputStream.Append(Out);

                Out.vPos.x = In[0].vPos.x;
                Out.vPos.y = In[0].vPos.y - vInvTileSize.y;
                Out.vTex0.x = vTex0.x;
                Out.vTex0.y = vTex0.y + vInvTileSize.w;
                outputStream.Append(Out);

                Out.vPos.x = In[0].vPos.x + vInvTileSize.x;
                Out.vPos.y = In[0].vPos.y - vInvTileSize.y;
                Out.vTex0.x = vTex0.x + vInvTileSize.z;
                Out.vTex0.y = vTex0.y + vInvTileSize.w;
                outputStream.Append(Out);

                outputStream.RestartStrip();
            }
        }
    }
}

struct PIXEL_OUT
{
    float4 vColor: SV_Target0;
};

PIXEL_OUT mainPS(GEOMETRY_OUT In)
{
    PIXEL_OUT Out;
    Out.vColor = Image.Sample( PointClamp, In.vTex0);
    Out.vColor.rgb += vColorTone;
#if OPAGUE
    clip((Out.vColor.a <= 0.01f) ? -1 : 1);
#endif
    if(activeLayer != -1.0f && In.layer != activeLayer)
    {
        Out.vColor.a = 0.25f;
    }
    return Out;
}
```

(It's auto-generated, so sorry for the formatting; but I doubt anybody will be able to fully understand it without further explanation anyway.)

----------------------------------------------------------------

So, does anybody see an issue with the code in question? Is there something you have to be aware of on Intel chips regarding uint data types and/or bit shifting? The shaders worked until I introduced the packing, so I guess I'm either doing something shady or there is a bug in the Intel drivers. From what I can tell, the function in question returns int2(1, 0) on the Intel cards, and the value is the same across the entire tilemap. Any ideas what's going on there?