This looks promising:

https://lisyarus.github.io/blog/graphics/2022/04/21/compute-blur.html


Instead of using mipmaps, you might want to take many samples from the high-resolution image. Then you can shape the sample kernel like a disc or hexagon to get a higher-quality bokeh effect.

Found an example here: https://catlikecoding.com/unity/tutorials/advanced-rendering/depth-of-field/

Taking many samples is expensive, but so is making mipmaps.

AMD has some downsampling code on GPUOpen. They made it faster by generating multiple mip levels in the same shader. This leaves many threads idle but reduces the number of dispatches and barriers (the stuff OpenGL pretty much hides from you).

However, that's some work. So I'd try brute-force sampling first, because it's probably fun and makes a good reference for later optimizations.

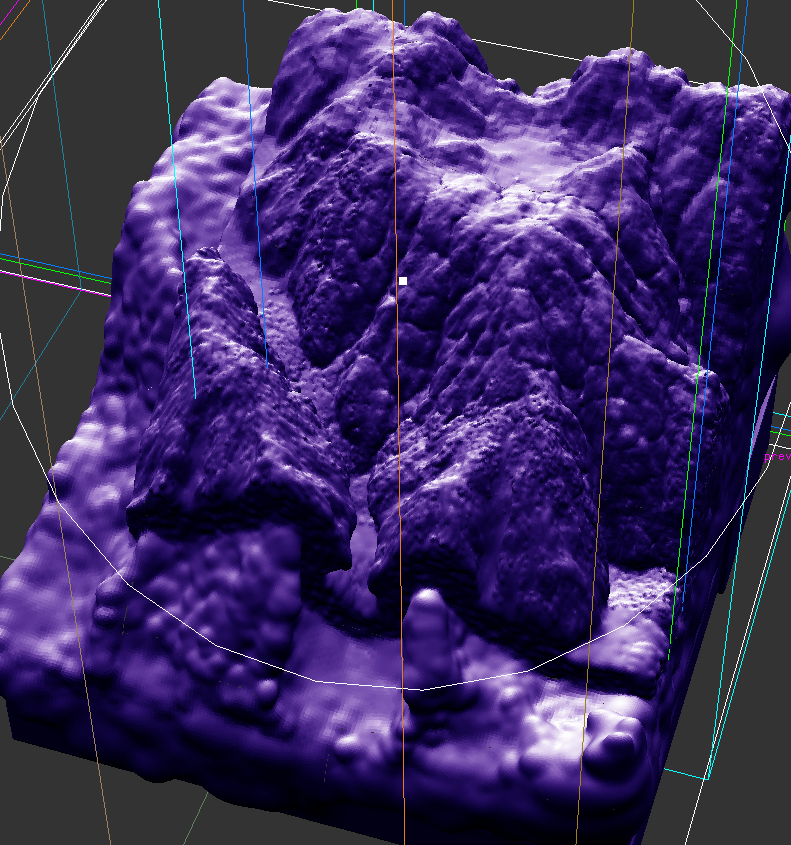

I've just visualized the processing of a terrain sim tile. Because it shows layers of detail, it causes some kind of DOF impression too:

So maybe, once we have fine grained LOD, it would help with rendering DOF as well… : )

Here's the shader that works. As you can see, it takes many samples. It runs wayyyy faster than the CPU version. As you will notice, I just use linear interpolation to determine how blurry a pixel is… there has to be a better way.

#version 430

uniform sampler2D depth_tex; // texture uniform

uniform sampler2D colour_tex; // texture uniform

uniform int img_width;

uniform int img_height;

vec2 img_size = vec2(img_width, img_height);

in vec2 ftexcoord;

layout(location = 0) out vec4 frag_colour;

// https://www.shadertoy.com/view/Xltfzj

void main()

{

const float pi_times_2 = 6.28318530718; // Pi*2

float directions = 4.0; // BLUR directions (Default 16.0 - More is better but slower)

float quality = 10.0; // BLUR quality (Default 4.0 - More is better but slower)

float size = 8.0; // BLUR size (radius)

vec2 radius = vec2(size/img_size.x, size/img_size.y);

vec4 blurred_colour = texture(colour_tex, ftexcoord);

for( float d=0.0; d<pi_times_2; d+= pi_times_2/directions)

for(float i=1.0/quality; i<=1.0; i+=1.0/quality)

blurred_colour += texture( colour_tex, ftexcoord + vec2(cos(d),sin(d))*radius*i);

// Output to screen

blurred_colour /= quality * directions;// - 15.0;

vec4 unblurred_colour = texture(colour_tex, ftexcoord);

float depth_colour = texture(depth_tex, ftexcoord).r;

depth_colour = pow(depth_colour, 100.0);

frag_colour.r = (1.0f - depth_colour) * unblurred_colour.r + depth_colour * blurred_colour.r;

frag_colour.g = (1.0f - depth_colour) * unblurred_colour.g + depth_colour * blurred_colour.g;

frag_colour.b = (1.0f - depth_colour) * unblurred_colour.b + depth_colour * blurred_colour.b;

frag_colour.a = 1.0;

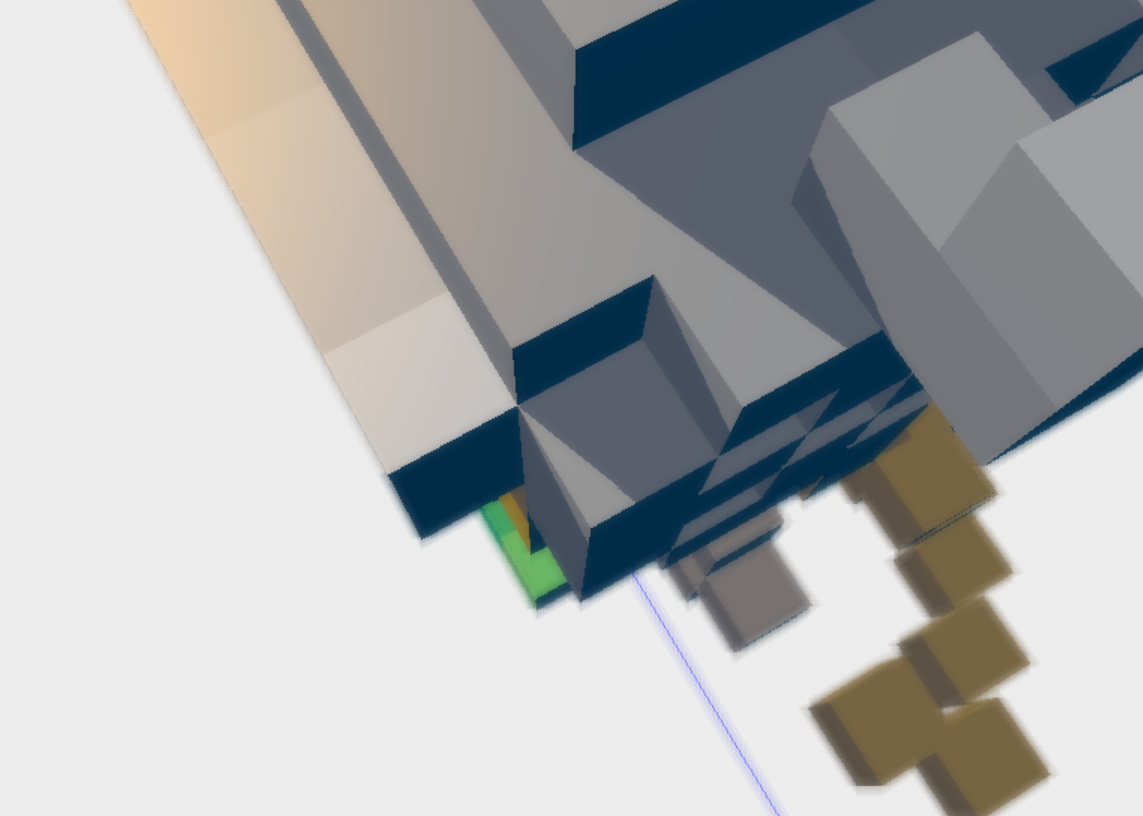

}Here is the final result:
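On the question of a better blur factor than the `pow(depth_colour, 100.0)` remap: one common option is to convert the depth-buffer value back to a distance and measure how far it lies from a chosen focus distance. The following is only a CPU-side C++ sketch of that arithmetic, not the method used in this thread. It assumes a standard OpenGL perspective projection with the default depth range, and `z_near`, `z_far`, `focus_distance` and `focus_range` are hypothetical parameters that would become uniforms in a shader.

```cpp
#include <algorithm>
#include <cmath>

// Convert a [0, 1] depth-buffer value to an eye-space distance.
// Assumes a standard perspective projection and glDepthRange(0, 1).
float linearize_depth(float depth, float z_near, float z_far)
{
    const float z_ndc = 2.0f * depth - 1.0f;
    return 2.0f * z_near * z_far / (z_far + z_near - z_ndc * (z_far - z_near));
}

// Blur factor in [0, 1]: 0 at the focus distance, 1 once the pixel is
// focus_range or more away from it (both parameters are assumptions).
float blur_factor(float depth, float z_near, float z_far,
                  float focus_distance, float focus_range)
{
    const float z = linearize_depth(depth, z_near, z_far);
    const float coc = (z - focus_distance) / focus_range;
    return std::min(std::abs(coc), 1.0f);
}
```

Unlike the depth remap, this blurs both in front of and behind the focus distance, and the result could be used directly as the blend factor between the sharp and blurred colour.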

taby said:

blurred_colour += texture( colour_tex, ftexcoord + vec2(cos(d),sin(d))*radius*i);

Be careful with transcendental math. Such operations are executed on a special unit which is narrow. E.g., a GPU CU may do 16 multiplications in one cycle on the wide SIMD unit, but only one sin in the same time. So execution is pipelined.

Though, afaik the same applies to a texture fetch, so maybe the sin is free anyway while waiting on the memory ops.

You could try to put the sample offsets into an array, which then goes to on chip constant ram. I guess that's a win but not sure how much.

Probably you want to sample a disc not just a circle (so samples are in the inside area as well). And eventually you want to rotate the sample kernel randomly per pixel. Both should help to break artificial patterns and artifacts.
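As a rough illustration of a disc-shaped kernel, here is a minimal C++ sketch that fills the unit disc with a golden-angle spiral and rotates an offset by a given angle. The spiral is just one possible layout (an assumption, not something prescribed in this thread), and `make_disc_kernel` and `rotate` are hypothetical helper names.

```cpp
#include <cmath>
#include <vector>

struct Offset { float x, y; };

// Spread `count` points over the unit disc with a golden-angle spiral.
// Point k sits at radius sqrt((k + 0.5) / count), which keeps the sample
// density roughly uniform over the disc's area instead of on its rim.
std::vector<Offset> make_disc_kernel(int count)
{
    const float golden_angle = 2.39996323f; // pi * (3 - sqrt(5))
    std::vector<Offset> kernel;
    kernel.reserve(count);
    for (int k = 0; k < count; ++k)
    {
        const float r = std::sqrt((k + 0.5f) / count);
        const float a = k * golden_angle;
        kernel.push_back({ r * std::cos(a), r * std::sin(a) });
    }
    return kernel;
}

// Rotate one offset by `angle` radians. In a shader the angle would come
// from a per-pixel random or noise value.
Offset rotate(Offset p, float angle)
{
    const float c = std::cos(angle);
    const float s = std::sin(angle);
    return { c * p.x - s * p.y, s * p.x + c * p.y };
}
```

The offsets are in units of the blur radius, so they would still be scaled by `radius` before being added to the texture coordinate.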

You may also want to try how it works with MSAA early (in case you want to use it). Likely this adds some extra cost for fetching textures from the frame buffer, which may be bigger than expected.

If you use TAA instead, you can accept noise from sampling as TAA will smooth it out. Noise is always better than banding, but especially so if we use TAA.

And in case you want motion blur, keep in mind you could do both at once for no real extra cost, by alternating the sample positions and/or weights. SSAO also could benefit from the sampling results, eventually. Ideally we want all post-processing in a single pass. Only after considering all of this we can properly decide on using mips and how many of them we need, imo.

The most interesting thing about DOF, to me, is this problem:

Imagine a scene where the focus is at some wire netting fence. We see trees, houses, and the sky in the distance behind the fence.

In this case we want to keep the sharp fence from bleeding into the blurred background, which looks terrible but was common in the PS3 era.

Likely there should be some weighting by depth. If the sampled pixel is in focus, it should have a lower weight. Something like that i guess. Never saw this mentioned in tutorials.

Imo that's important to get right. Otherwise it's better to have no DOF at all, because such artifacts look so awful.

I agree with Joe.

I forgot to mention, to use MSAA in FBOs you will need glTexImage2DMultisample() and enable GL_MULTISAMPLE. Or you could use FXAA, since you can just put that right before the blurring in the same shader without much hassle.

taby said:

JoeJ do you recommend that I pass in the sine and cosine values in as a texture?

No. Reading textures means accessing VRAM, which may be cached on chip, but if possible i would avoid memory access in inner loops. I assume sin is still faster than that.

There are only two kinds of on-chip memory which we can assume are fast to access: LDS memory, which is available only to compute, and constant RAM. Constant RAM is filled when a new shader is dispatched, and is then read-only for that shader.

Iirc, in OpenGL you can put stuff into constant RAM using 'shader uniforms', often used to hold things like light positions or various matrices.

Or you can write a small LUT in shader code directly as an array. The Bokeh example i have linked does this.

Because you can't avoid the texture fetches in the inner loop, there might be no noticeable win. But just to mention.
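A minimal C++ sketch of such a lookup table, assuming it should reproduce the `directions` x `quality` sample pattern of the shader posted earlier in the thread. `make_ring_offsets` is a hypothetical helper name, and whether this gives a measurable win is untested.

```cpp
#include <cmath>
#include <vector>

// Flat list of (x, y) offsets in units of the blur radius, one pair per
// sample, in the same order as the shader's direction / quality loops.
std::vector<float> make_ring_offsets(int directions, int quality)
{
    const float two_pi = 6.28318530718f;
    std::vector<float> offsets;
    offsets.reserve(2 * directions * quality);
    for (int d = 0; d < directions; ++d)
    {
        const float angle = two_pi * d / directions;
        for (int i = 1; i <= quality; ++i)
        {
            const float t = static_cast<float>(i) / quality;
            offsets.push_back(std::cos(angle) * t);
            offsets.push_back(std::sin(angle) * t);
        }
    }
    return offsets;
}
```

The result could be uploaded once with `glUniform2fv(location, directions * quality, offsets.data())` into a `uniform vec2` array of matching size, or pasted into the shader as a constant array. The inner loop then does one array read per sample instead of a `cos` and a `sin`.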

Bastian von Halem said:

I agree with Joe.

I forgot to mention, to use MSAA in FBOs you will need glTexImage2DMultisample() and enable GL_MULTISAMPLE. Or you could use FXAA, since you can just put that right before the blurring in the same shader without much hassle.

Do you have any code or tutorials to recommend? I am trying to do MSAA with glTexImage2DMultisample and glEnable(GL_MULTISAMPLE), but I'm having no luck yet.