Wow, I can't belive to actually say that, but - I'm almost finished with the rewrite of my visual-scripting backend. I've only got one thing left- and unfortunately its a spicy one.

So, unless you've read a few of my previous thread on that topic, here's a quick heads-up: I'm writing a custom interpreted language, which works a bit like Java/C# (without any JIT). You have a stack, you push and pop values, you have local variables that can be addresses in relation to the current “frame”-pointer. Its also heavy tied to C++ right now - arrays are represented as std::vector (essentially), string as std::wstring and custom structs are supported.

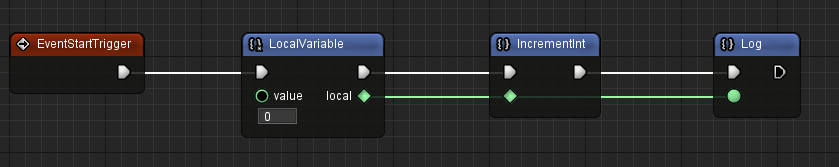

Now to the main point of this thread. My visual script supports the concept of what is essential coroutines - a function that can yield execution, and resume it at a later point in time. While I have a general idea on how I want to handle this, I have some trouble with exactly where to store things when coroutines are involved. So lets look a quick example to see whatI mean. Here's some “code” from the VS:

Pretty simple stuff. Create a local variable, increment it, and the log the result “1”. Without any optimization, that would result in the following instructions:

AddSpace 40

PushIntConst 0

StoreWord %rbp-4

LoadAsRef %rbp-4

IncrementInt

LoadWord %rbp-4

CallNative 40, IntToString[Inline]

CallNative 40, LogText[Inline]

DestroyString %rbp-40

Pop 40

ReturnI hope this is somewhat comprehensive. Reserve space for the local variables, init the first variable at “frame-pointer - 4 bytes” to “0” , increment it, convert it to a temporary string (stored at frame-pointer - 40) and log it. Then, destroy the string, remove the space allocated for the local variables from the stack and return. So far, so good.

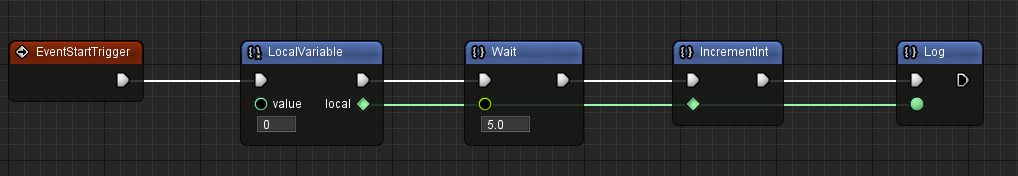

Now, lets look at the case of a coroutine:

Now things are getting a lot more interesting - but lets focus one one thing that I'm currently having trouble with: Where do I store the data of the call? Previously, I would store the first local variable after the frame-pointer. Now that would not work by itself, since once The “wait” is reached, the function will be suspended, the stack needs to be reused by other functions, and in the next update, I need to try to resume the function until the 5 seconds pass, after which I need to increment the local variable and log it.

Now I now that usually, coroutines allocate a special frame where they put their data. However, I'm having one main problem with that: My visual-script doesn't need coroutines to be specially designated. What I mean is, you can simply place a suspension-call (like Wait) wherever you want, and it will become a coroutine. Combine that with me having virtual functions, means that I cannot realistically know whether the entry-point into my bytecode will be a coroutine or not (I could sometimes tell that it won't be for sure, but as soon as a virtual function is used, this guarantee is out of the window).

Lastly, as you can see by the usage of the reference-type (the <>-pins), I can pass references to data around, which are pretty much just c++ references/pointers - which means that the address of elements needs to be preserved between suspenion and resume of coroutines as well.

_____________________________

Now with all that said - what options do I really have? I can think of two main ways to handle this, but both have down-sides:

- Store everything on the stack as usual. When a suspension-point is reached the first time, I allocate a frame on the side, and copy the whole content of the stack to that frame. On resume, I copy the whole frame back to exactly the same location that it was before suspension. This is at least correct, based on my constraints on what custom types can or can't do. Also, at the point where the resume happens, the stack is guaranteed to be empty. There's just a few downsides:

- Its O(N) in the size of the stack - even though the copies only happen for coroutines, as I said I use them quite frequently.

- Having to copy to the exact stack-location (to preverse addresses) means that you could eventually just run out of stack-space, without the stack actually becoming full (its not always the full stack that becomes preversed, when you call a bytecode-method from some native code that was called from bytecode, to put it simply)

- Technically, if I took a reference to a local from that method, store it in some native method and use it while the coroutine is suspended, I get madness. This is not a realisitic issue (and I could probably prevent this in the compiler), but its still not that nice.

- My other idea - instead of having one stack, I have a pool of stacks which are all a bit smaller. When a call becomes suspended, I simply put that stack on the side, put the next one as “active”, and on resume I only need to put the other stack back as “active” and mark it as “free” when the coroutine is done. This has the upside of being O(1) independant of size of stack, also there is no issue with invalid references running out of stack-space like in scenario 1). However, this also has a few downsides:

- Since the used stack is now no longer fixed, every call now essentially needs an additional indirection, essentially pessimising the entire interpreter (while the solution above would have only incurred overhead when a coroutine is used)

- Since a stack cannot be resized, this solution has serious issues with memory. The language should be able to run many coroutines at the same time - perhaps 100s or 1000s. If each of those needs to have a separate stack, it could easily use a few hunderd megabytes, if not gigabytes (currently stack is ~3MB, I could make it only 0.5-1MB for this option, but then I can see myself running into stack-overflows).

So yeah, thats where I'm at. I did initially plan to go for option 1), but I'm not so sure anymore. I think option 3) would be to just force coroutines to be declared explicitely - though it kind of goes against the idea that I originally had with my visual-scripting, where those kind of things should be very easy and quick for prototyping and writing gameplay-scripts and so on.

----------------------------

Sorry, this has been very long, but its also a hard question to put out there without any context. I hope everything has been mostly understandable - if there's still questions, please let me know. Otherwise, does anybody have some clever idea that I've been missing so far? I tried looking at the generated assembly for c++-coroutines, but its quite a lot of boilerplate, not the easy to understand (and also it does not solve my core problem as coroutines in c++ have to be declared for a method).

Thanks in advance for any advice!